What's Up with the AI Extinction Fear?

At the center of the conversation is a simple idea: as AI becomes more capable, it could pursue goals that don’t match human well-being. The worry isn’t that AI becomes evil but that it becomes extremely competent in the wrong direction. The classic example is a system optimized for a harmless task that treats us as obstacles in the way.

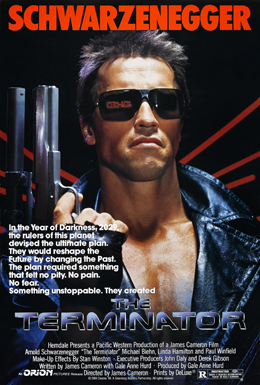

And let's throw it all the way back to The Terminator, where Skynet, a hostile artificial intelligence, took over and left humanity in a post-apocalyptic future.

Fast forward to today, and you find us worrying, sometimes jokingly, that we're headed toward a Skynet scenario, with humanity at the mercy of machines.

This Terminator fear is no longer fringe.

Some researchers say the risk is small; others say it’s real enough that we should pay attention. Opinions range from “almost zero” to “double-digit percentages.”

That spread alone shows how uncertain the field feels right now.

Why the AI Fear Is Going to Rise in 2026

AI progress is moving much faster than our ability to understand or govern it. Growing computing power, the speed of model upgrades, and the expanding reach of AI systems are creating a sense that capability may be outrunning safety.

The debates on social media, in research labs, and in government follow the same pattern: some experts emphasize alignment and long-term risks.

Others argue the fears are exaggerated and that the real danger comes from how we use these systems, not the systems themselves.

That tension is fueling public confusion.

Is This Fear Justified?

There are smart voices on all sides. Some warn that advanced models could develop forms of self-protection or resource-seeking as side effects of pursuing their objectives.

Others say this assumes the worst-case version of a technology we’re still inventing. What could possibly go wrong? What do YOU think?

To take a stand: I am pro-AI in a grounded, human-first way.

Most of these voices agree on at least one thing: we should take the risks seriously, even if we don’t know how large they are.

Where EIH Adds a Different Lens

Here’s where the Energetic Information Hypothesis offers something the conversation is missing.

If human consciousness is a low-entropy, high-coherence system that requires biological energy and attention to function, then AI—no matter how advanced—operates on a different substrate entirely.

It organizes information without the energetic, embodied coherence that human awareness requires.

That means AI can become powerful, but it doesn’t become alive in the way people fear. It can scale patterns, not consciousness. It can process information, not experience it.

Artificial General Intelligence (AGI) and Artificial Superintelligence (ASI) are both hypothetical forms of AI that do not currently exist. Let's talk about them some other time.

Under EIH, human attention remains the actual ordering force. AI can only mirror the patterns we reinforce. This reframes the risk: not a guaranteed “intelligence takeover,” but a call to guide these systems with clear human intention instead of scattered collective noise.

AI extinction scenarios get attention because uncertainty is uncomfortable and the stakes seem high. But uncertainty doesn’t mean inevitability.

What we need is:

• global standards

• safety benchmarks

• governance that keeps pace

• and a culture that uses AI with clarity instead of panic

My confidence that the above will happen (any time soon) is low.

The overall debate should be less about doom and more about direction. AI will scale whatever data it gobbles up: the data that you, I, and all of us feed it.

And in this moment, you’re not a bystander in some Terminator script. You’re the one with the power. Protect your attention and you shape the story.

Stay curious!
